Custom background shaders

Custom background shaders that render onto XR device screens use a display matrix to transform the texture coordinates of the CPU-side image. Refer to the manual page on the display matrix format and derivation for details of that matrix.

XR vertex shader operation

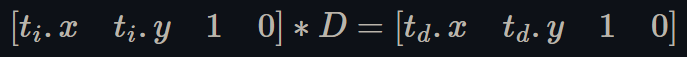

The vertex shaders will perform the following mathematical operation, where "ti" are the texture coordinates of the CPU side image, "D" is the display matrix, and "td" are the texture coordinates of XR device's screen:

Use the display matrix and image texture coordinates to find the output device coordinates
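A written-out form of the operation follows. It is reconstructed from the GLSL and HLSL lines on this page rather than copied from the figure, so treat it as a reading of that code: the image coordinates form a row vector (t_{i,x}, t_{i,y}, 1, 0) that is multiplied by D, where D_{rc} denotes row r, column c, counting from 0.

$$
\begin{bmatrix} t_{d,x} & t_{d,y} & t_{d,z} & t_{d,w} \end{bmatrix}
= \begin{bmatrix} t_{i,x} & t_{i,y} & 1 & 0 \end{bmatrix} D
$$

$$
\begin{aligned}
t_{d,x} &= t_{i,x} D_{00} + t_{i,y} D_{10} + D_{20} \\
t_{d,y} &= t_{i,x} D_{01} + t_{i,y} D_{11} + D_{21}
\end{aligned}
$$

Only t_{d,x} and t_{d,y} are used as the output texture coordinates. The third row of D supplies the translation because it is multiplied by the constant 1, and the fourth row is multiplied by 0, so it never contributes.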

GLSL example code

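For GLSL vertex shaders, the following line transforms the image texture coordinates into texture coordinates on the device screen:

```glsl
// outputTextureCoord: a vec2 output (varying) assumed to be declared elsewhere in the shader.
// Plain 1.0 / 0.0 literals avoid the "f" suffix, which GLSL ES 1.00 rejects.
outputTextureCoord = (vec4(gl_MultiTexCoord0.xy, 1.0, 0.0) * _UnityDisplayTransform).xy;
```

where _UnityDisplayTransform is the display matrix and gl_MultiTexCoord0.xy holds the 2D texture coordinates of the image.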

Note: when a vector is on the left of the * operator (vector * matrix), GLSL treats it as a row vector, so the product equals transpose(matrix) * vector. For more information, refer to https://en.wikibooks.org/wiki/GLSL_Programming/Vector_and_Matrix_Operations#Operators
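To make the row-vector convention concrete, here is a CPU-side sketch in Python (not shader code) that mirrors the GLSL line. The matrix D is a hypothetical display matrix chosen for illustration (a 90-degree rotation of texture space); real display matrices come from the platform. The helper names are also illustrative.

```python
# Hypothetical display matrix, listed row by row: D[r][c] is row r, column c.
# With the row-vector convention it maps (u, v) -> (1 - v, u).
D = [
    [0.0, 1.0, 0.0, 0.0],
    [-1.0, 0.0, 0.0, 0.0],
    [1.0, 0.0, 1.0, 0.0],  # third row: translation, multiplied by the constant 1
    [0.0, 0.0, 0.0, 1.0],  # fourth row: multiplied by 0, never contributes
]


def row_times_matrix(vec, m):
    """GLSL-style `vec * M`: result[c] = sum over r of vec[r] * m[r][c]."""
    return [sum(vec[r] * m[r][c] for r in range(len(vec))) for c in range(len(m[0]))]


def matrix_times_column(m, vec):
    """GLSL-style `M * vec`: result[r] = sum over c of m[r][c] * vec[c]."""
    return [sum(m[r][c] * vec[c] for c in range(len(vec))) for r in range(len(m))]


def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]


def display_transform_glsl(u, v, m):
    """Mirror of (vec4(uv, 1.0, 0.0) * D).xy from the GLSL line."""
    td = row_times_matrix([u, v, 1.0, 0.0], m)
    return td[0], td[1]


print(display_transform_glsl(0.25, 0.5, D))  # (0.5, 0.25)

# vector * matrix is the same as transpose(matrix) * vector.
ti = [0.25, 0.5, 1.0, 0.0]
assert row_times_matrix(ti, D) == matrix_times_column(transpose(D), ti)
```

With this D, the image coordinate (0.25, 0.5) lands at (0.5, 0.25) on screen.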

HLSL example code

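For HLSL vertex shaders, the following line transforms the image texture coordinates into texture coordinates on the device screen:

```hlsl
// .xy keeps the constructor valid whether v.texcoord is declared as float2 or float4.
outputTextureCoord = mul(float3(v.texcoord.xy, 1.0f), _UnityDisplayTransform).xy;
```

where _UnityDisplayTransform is the display matrix and v.texcoord holds the 2D texture coordinates of the image (v is the vertex shader's input struct).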

Note: when the first argument to mul() is a vector, HLSL treats it as a row vector. mul() also accepts the three-component vector used above with the 4x4 display matrix; the omitted trailing 0 would only multiply the fourth row of the matrix, so leaving it out does not change the x and y of the output texture coordinates. For more information, refer to https://learn.microsoft.com/en-us/windows/win32/direct3dhlsl/dx-graphics-hlsl-mul
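A similar Python sketch can check the point about the dropped 0. It assumes, as the note describes, that mul() with a three-component row vector effectively uses only the first three rows of the matrix; the helper mul_row_vector models that assumption and is not the HLSL implementation. The display matrix is hypothetical, and its fourth row is deliberately filled with arbitrary values to show that it never reaches x and y.

```python
# Hypothetical display matrix, listed row by row: D[r][c] is row r, column c.
# Rows 0-2 rotate texture space by 90 degrees; row 3 holds arbitrary values
# on purpose, to show that it never influences the x and y results.
D = [
    [0.0, 1.0, 0.0, 0.0],
    [-1.0, 0.0, 0.0, 0.0],
    [1.0, 0.0, 1.0, 0.0],
    [3.0, -2.0, 0.5, 1.0],
]


def mul_row_vector(vec, m):
    """Row vector times matrix, using only the first len(vec) rows of m.

    Models the assumed behavior of mul(float3, float4x4): a 3-component
    row vector meets the first three rows of the 4x4 matrix.
    """
    n = len(vec)
    return [sum(vec[r] * m[r][c] for r in range(n)) for c in range(len(m[0]))]


def xy_with_float4(u, v, m):
    """(u, v, 1, 0) * D, keeping x and y."""
    result = mul_row_vector([u, v, 1.0, 0.0], m)
    return result[0], result[1]


def xy_with_float3(u, v, m):
    """(u, v, 1) * D, keeping x and y, as in the HLSL line."""
    result = mul_row_vector([u, v, 1.0], m)
    return result[0], result[1]


samples = [0.0, 0.25, 0.5, 1.0]
for u in samples:
    for v in samples:
        # The dropped fourth component is 0, so both forms agree exactly.
        assert xy_with_float3(u, v, D) == xy_with_float4(u, v, D)

print(xy_with_float3(0.25, 0.5, D))  # (0.5, 0.25)
```

Filling the fourth row with nonzero values is a stress test of the claim: even when that row is not [0, 0, 0, 1], it cannot affect the output x and y.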