GELU

- class torch.nn.GELU(approximate='none')

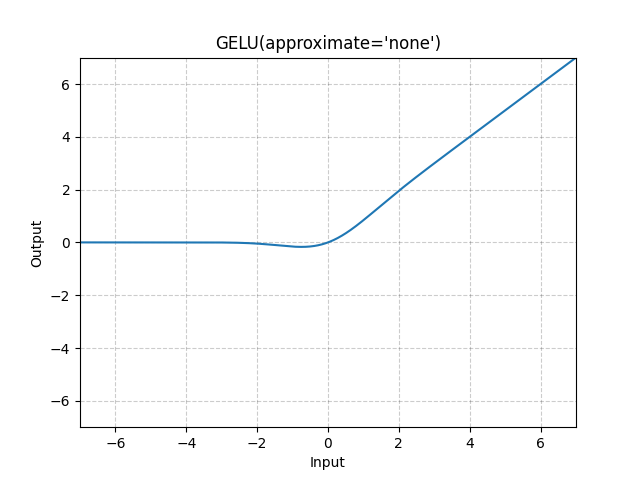

Applies the Gaussian Error Linear Units function.

\[\text{GELU}(x) = x * \Phi(x)\]

where \(\Phi(x)\) is the cumulative distribution function of the standard Gaussian distribution.
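As a rough illustration (a pure-Python sketch, not the PyTorch implementation), the exact form can be evaluated through the standard identity \(\Phi(x) = \tfrac{1}{2}(1 + \operatorname{erf}(x/\sqrt{2}))\) using the standard library's `math.erf`. The helper name `gelu_exact` is hypothetical and works on a single float.

```python
import math


def gelu_exact(x: float) -> float:
    """Exact GELU for a single float: x * Phi(x).

    Hypothetical helper for illustration; Phi is the standard normal CDF,
    expressed here through the error function.
    """
    phi = 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
    return x * phi


# Evaluate a few sample points to see the shape of the curve.
for value in (-3.0, -1.0, 0.0, 1.0, 3.0):
    print(f"GELU({value:+.1f}) = {gelu_exact(value):+.6f}")
```

For large positive inputs \(\Phi(x) \to 1\), so the output approaches \(x\); for large negative inputs \(\Phi(x) \to 0\), so the output approaches 0.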

When the approximate argument is 'tanh', GELU is estimated with:

\[\text{GELU}(x) = 0.5 * x * (1 + \text{Tanh}(\sqrt{2 / \pi} * (x + 0.044715 * x^3)))\]

- Parameters:

approximate (str, optional) – the GELU approximation algorithm to use: 'none' | 'tanh'. Default: 'none'
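The two values accepted by approximate can be sketched in plain Python as below. This assumes a hypothetical scalar helper `gelu(x, approximate=...)` that mirrors the parameter; it is not the PyTorch source, and raising `ValueError` for any other string is this sketch's own choice. The script also measures how far the 'tanh' estimate drifts from the exact form on a grid of inputs.

```python
import math


def gelu(x: float, approximate: str = "none") -> float:
    """Scalar GELU mirroring the 'none' | 'tanh' choice (hypothetical helper)."""
    if approximate == "none":
        # Exact form: x * Phi(x), with Phi the standard normal CDF via erf.
        return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))
    if approximate == "tanh":
        # Tanh estimate: 0.5 * x * (1 + tanh(sqrt(2/pi) * (x + 0.044715 * x^3))).
        inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
        return 0.5 * x * (1.0 + math.tanh(inner))
    # This sketch rejects any other value; the exception type is an assumption.
    raise ValueError(f"approximate must be 'none' or 'tanh', got {approximate!r}")


# Largest absolute gap between the two forms for x in [-6, 6], step 0.01.
grid = [i / 100 for i in range(-600, 601)]
max_gap = max(abs(gelu(x) - gelu(x, approximate="tanh")) for x in grid)
print(f"max |exact - tanh| on [-6, 6]: {max_gap:.2e}")
```

The printed gap gives a rough sense of how closely the tanh estimate tracks the exact curve over this range.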

- Shape:

Input: \((*)\), where \(*\) means any number of dimensions.

Output: \((*)\), same shape as the input.
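Because GELU is applied to each element independently, the output has the same shape as the input for any number of dimensions. A minimal sketch of that property, assuming nested Python lists stand in for tensors and using a hypothetical `apply_elementwise` helper (PyTorch itself operates on tensors, not lists):

```python
import math
from typing import Union

# A scalar or an arbitrarily nested list of scalars.
Nested = Union[float, list]


def gelu_exact(x: float) -> float:
    # Exact GELU for one value: x * Phi(x).
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def apply_elementwise(data: Nested) -> Nested:
    """Apply GELU to every scalar in a nested list, preserving the nesting."""
    if isinstance(data, list):
        return [apply_elementwise(item) for item in data]
    return gelu_exact(float(data))


batch = [[[-1.0, 0.0], [1.0, 2.0]], [[0.5, -0.5], [3.0, -3.0]]]  # shape (2, 2, 2)
out = apply_elementwise(batch)
print(out)
```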

Examples:

>>> import torch
>>> from torch import nn
>>> m = nn.GELU()
>>> input = torch.randn(2)
>>> output = m(input)