For the theory of the image degradation model and the Wiener filter, refer to the tutorial Out-of-focus Deblur Filter. This page considers only linear motion blur distortion. The motion-blurred image used here is a real-world image, in which the blur was caused by a moving subject.

The point spread function (PSF) of a linear motion blur distortion is a line segment. Such a PSF is specified by two parameters: \(LEN\) is the length of the blur and \(THETA\) is the angle of motion.
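In continuous coordinates, an idealized version of this PSF can be written as follows. The notation is ours, not the original tutorial's: \((x, y)\) is measured from the PSF center with \(y\) pointing up, \(\theta\) is \(THETA\) in radians, \(\delta\) is the Dirac delta, and \(\operatorname{rect}(u)\) equals 1 for \(|u| \le 1/2\) and 0 otherwise.

\[
h(x, y) = \frac{1}{LEN}\,\delta\!\left(x\sin\theta - y\cos\theta\right)\operatorname{rect}\!\left(\frac{x\cos\theta + y\sin\theta}{LEN}\right)
\]

The factor \(1/LEN\) makes the PSF integrate to 1, so the blur spreads each point's brightness along the motion path without changing its total. calcPSF() in the listing below builds a discrete counterpart of this function.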

This page uses the Wiener filter as the restoration filter; for details, refer to the tutorial Out-of-focus Deblur Filter. To synthesize the Wiener filter for the motion blur case, you need to specify the signal-to-noise ratio (\(SNR\)) as well as the PSF parameters \(LEN\) and \(THETA\).
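For reference, one common way to write the Wiener filter with these parameters is shown below (notation ours: \(H\) is the DFT of the PSF and \(H^*\) its complex conjugate).

\[
H_w = \frac{H^*}{|H|^2 + \dfrac{1}{SNR}}
\]

calcWnrFilter() in the listing receives \(1/SNR\) as its nsr argument and uses only the real part of \(H\). That is a reasonable simplification when the centered PSF is (nearly) point-symmetric: once fftshift() moves its center to the origin, a symmetric PSF has a real spectrum, so \(H^* = H\).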

```cpp
#include <iostream>
#include "opencv2/imgproc.hpp"
#include "opencv2/imgcodecs.hpp"

using namespace cv;
using namespace std;

void help();
void calcPSF(Mat& outputImg, Size filterSize, int len, double theta);
void fftshift(const Mat& inputImg, Mat& outputImg);
void filter2DFreq(const Mat& inputImg, Mat& outputImg, const Mat& H);
void calcWnrFilter(const Mat& input_h_PSF, Mat& output_G, double nsr);
void edgetaper(const Mat& inputImg, Mat& outputImg, double gamma = 5.0, double beta = 0.2);

// Command-line keys: name | default value | help text
const String keys =
"{help h usage ? |             | print this message             }"
"{image          |input.png    | input image name               }"
"{LEN            |125          | length of a motion             }"
"{THETA          |0            | angle of a motion in degrees   }"
"{SNR            |700          | signal to noise ratio          }"
;

int main(int argc, char *argv[])
{
    help();
    CommandLineParser parser(argc, argv, keys);
    if (parser.has("help"))
    {
        parser.printMessage();
        return 0;
    }

    int LEN = parser.get<int>("LEN");
    double THETA = parser.get<double>("THETA");
    int snr = parser.get<int>("SNR");
    string strInFileName = parser.get<String>("image");

    if (!parser.check())
    {
        parser.printErrors();
        return 0;
    }

    // Load the blurred input as a single-channel grayscale image
    Mat imgIn = imread(strInFileName, IMREAD_GRAYSCALE);
    if (imgIn.empty()) // check whether the image was loaded
    {
        cout << "ERROR : Image cannot be loaded..!!" << endl;
        return -1;
    }

    Mat imgOut;

    // Only an even-sized region is processed (fftshift() swaps quadrants)
    Rect roi = Rect(0, 0, imgIn.cols & -2, imgIn.rows & -2);

    // Wiener filter Hw calculation
    Mat Hw, h;
    calcPSF(h, roi.size(), LEN, THETA);
    calcWnrFilter(h, Hw, 1.0 / double(snr));

    // Taper the edges to reduce ringing, then filter in the frequency domain
    imgIn.convertTo(imgIn, CV_32F);
    edgetaper(imgIn, imgIn);
    filter2DFreq(imgIn(roi), imgOut, Hw);

    // Convert to 8-bit, stretch to the full 0..255 range and save the result
    imgOut.convertTo(imgOut, CV_8U);
    normalize(imgOut, imgOut, 0, 255, NORM_MINMAX);
    imwrite("result.jpg", imgOut);
    return 0;
}
```

```cpp
void help()
{
    cout << "2018-08-14" << endl;
    cout << "Motion_deblur_v2" << endl;
    cout << "You will learn how to recover an image with motion blur distortion using a Wiener filter" << endl;
}

// Builds a line-segment PSF of length len at angle theta (degrees), normalized to sum 1
void calcPSF(Mat& outputImg, Size filterSize, int len, double theta)
{
    Mat h(filterSize, CV_32F, Scalar(0));
    Point point(filterSize.width / 2, filterSize.height / 2);
    // A filled ellipse with one zero semi-axis degenerates into a line segment
    ellipse(h, point, Size(0, cvRound(float(len) / 2.0)), 90.0 - theta, 0, 360, Scalar(255), FILLED);
    Scalar summa = sum(h);
    outputImg = h / summa[0];
}

// Swaps diagonal quadrants; for even sizes this moves the center to (0, 0) and back
void fftshift(const Mat& inputImg, Mat& outputImg)
{
    outputImg = inputImg.clone();
    int cx = outputImg.cols / 2;
    int cy = outputImg.rows / 2;
    Mat q0(outputImg, Rect(0, 0, cx, cy));
    Mat q1(outputImg, Rect(cx, 0, cx, cy));
    Mat q2(outputImg, Rect(0, cy, cx, cy));
    Mat q3(outputImg, Rect(cx, cy, cx, cy));
    Mat tmp;
    q0.copyTo(tmp);
    q3.copyTo(q0);
    tmp.copyTo(q3);
    q1.copyTo(tmp);
    q2.copyTo(q1);
    tmp.copyTo(q2);
}

// Multiplies the image spectrum by H and returns the real part of the inverse DFT
void filter2DFreq(const Mat& inputImg, Mat& outputImg, const Mat& H)
{
    Mat planes[2] = { Mat_<float>(inputImg.clone()), Mat::zeros(inputImg.size(), CV_32F) };
    Mat complexI;
    merge(planes, 2, complexI);
    dft(complexI, complexI, DFT_SCALE);

    Mat planesH[2] = { Mat_<float>(H.clone()), Mat::zeros(H.size(), CV_32F) };
    Mat complexH;
    merge(planesH, 2, complexH);
    Mat complexIH;
    mulSpectrums(complexI, complexH, complexIH, 0);

    idft(complexIH, complexIH);
    split(complexIH, planes);
    outputImg = planes[0];
}

// Wiener filter G = H / (|H|^2 + nsr), built from the real part of the PSF spectrum
void calcWnrFilter(const Mat& input_h_PSF, Mat& output_G, double nsr)
{
    Mat h_PSF_shifted;
    fftshift(input_h_PSF, h_PSF_shifted);
    Mat planes[2] = { Mat_<float>(h_PSF_shifted.clone()), Mat::zeros(h_PSF_shifted.size(), CV_32F) };
    Mat complexI;
    merge(planes, 2, complexI);
    dft(complexI, complexI);
    split(complexI, planes);
    Mat denom;
    pow(abs(planes[0]), 2, denom);
    denom += nsr;
    divide(planes[0], denom, output_G);
}

// Multiplies the image by a separable tanh window that falls off toward the borders
void edgetaper(const Mat& inputImg, Mat& outputImg, double gamma, double beta)
{
    int Nx = inputImg.cols;
    int Ny = inputImg.rows;
    Mat w1(1, Nx, CV_32F, Scalar(0));
    Mat w2(Ny, 1, CV_32F, Scalar(0));

    float* p1 = w1.ptr<float>(0);
    float* p2 = w2.ptr<float>(0);
    float dx = float(2.0 * CV_PI / Nx);
    float x = float(-CV_PI);
    for (int i = 0; i < Nx; i++)
    {
        p1[i] = float(0.5 * (tanh((x + gamma / 2) / beta) - tanh((x - gamma / 2) / beta)));
        x += dx;
    }
    float dy = float(2.0 * CV_PI / Ny);
    float y = float(-CV_PI);
    for (int i = 0; i < Ny; i++)
    {
        p2[i] = float(0.5 * (tanh((y + gamma / 2) / beta) - tanh((y - gamma / 2) / beta)));
        y += dy;
    }
    Mat w = w2 * w1; // outer product: Ny x Nx window
    multiply(inputImg, w, outputImg);
}
```


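The motion blur recovery algorithm consists of three steps: PSF generation, Wiener filter generation, and filtering the blurred image in the frequency domain. In main() these steps correspond to the calls to calcPSF(), calcWnrFilter() and filter2DFreq(); before filtering, the input is converted to CV_32F and its edges are tapered with edgetaper().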
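The three steps can be illustrated end to end on a 1-D signal with only the C++ standard library. This is a minimal sketch under stated assumptions, not the tutorial's code: it uses a naive O(N²) DFT instead of cv::dft(), a causal box PSF as a 1-D motion blur, circular convolution, no added noise, and hypothetical names (dft1d, restoreDemo, DemoErrors).

```cpp
#include <algorithm>
#include <cmath>
#include <complex>
#include <cstddef>
#include <vector>

using cd = std::complex<double>;

// Naive O(N^2) DFT. inverse=true also applies the 1/N scale.
std::vector<cd> dft1d(const std::vector<cd>& in, bool inverse) {
    const double pi = std::acos(-1.0);
    const std::size_t n = in.size();
    const double sign = inverse ? 1.0 : -1.0;
    std::vector<cd> out(n);
    for (std::size_t k = 0; k < n; ++k) {
        cd acc = 0.0;
        for (std::size_t t = 0; t < n; ++t)
            acc += in[t] * std::polar(1.0, sign * 2.0 * pi * double(k * t) / double(n));
        out[k] = inverse ? acc / double(n) : acc;
    }
    return out;
}

struct DemoErrors { double blurred; double restored; };

// Blurs a step signal with a box PSF of length len, restores it with a
// Wiener filter conj(H) / (|H|^2 + nsr), and reports max absolute errors.
DemoErrors restoreDemo(std::size_t n, std::size_t len, double nsr) {
    std::vector<cd> x(n, cd(0.0)), h(n, cd(0.0));
    for (std::size_t i = n / 4; i < n / 2; ++i) x[i] = 1.0;         // original signal
    for (std::size_t i = 0; i < len; ++i) h[i] = 1.0 / double(len); // step 1: PSF, sums to 1

    std::vector<cd> X = dft1d(x, false), H = dft1d(h, false);
    std::vector<cd> B(n), R(n);
    for (std::size_t k = 0; k < n; ++k) {
        B[k] = X[k] * H[k];                                  // circular blur
        cd G = std::conj(H[k]) / (std::norm(H[k]) + nsr);    // step 2: Wiener filter
        R[k] = B[k] * G;                                     // step 3: frequency-domain filtering
    }
    std::vector<cd> b = dft1d(B, true), r = dft1d(R, true);

    DemoErrors e{0.0, 0.0};
    for (std::size_t i = 0; i < n; ++i) {
        e.blurred = std::max(e.blurred, std::abs(b[i].real() - x[i].real()));
        e.restored = std::max(e.restored, std::abs(r[i].real() - x[i].real()));
    }
    return e;
}
```

For example, restoreDemo(64, 5, 1e-6) blurs a step edge so that the blurred signal is off by about 0.8 at the edges, while the Wiener-restored signal stays far closer to the original. Raising nsr (lowering \(SNR\)) makes the filter more conservative at frequencies where \(|H|\) is small, which matters once real noise is present.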

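The function calcPSF() forms the PSF from the input parameters \(LEN\) and \(THETA\) (in degrees). It draws a filled ellipse with one semi-axis equal to zero, which degenerates into a line segment of length about \(LEN\) oriented according to \(THETA\), and then divides the result by its sum so that the PSF elements add up to 1.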
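A standard-library sketch of the same idea follows. It is a stand-in, not calcPSF() itself: instead of drawing a degenerate ellipse with cv::ellipse(), the hypothetical linePSF() samples points densely along the segment and marks the nearest pixel, so individual pixels may differ from OpenCV's rasterization. It keeps calcPSF()'s center (width / 2, height / 2) and its normalization to a unit sum, and it assumes \(THETA\) is measured counterclockwise as seen on screen.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper: rasterizes a centered line-segment PSF covering about
// len pixels at angle thetaDeg into a width x height row-major buffer
// normalized to sum 1. Image rows grow downward.
std::vector<float> linePSF(int width, int height, double len, double thetaDeg) {
    const double pi = std::acos(-1.0);
    const double th = thetaDeg * pi / 180.0;
    const double cx = static_cast<double>(width / 2);   // integer center, as in calcPSF
    const double cy = static_cast<double>(height / 2);
    std::vector<float> h(static_cast<std::size_t>(width) * height, 0.0f);

    // Endpoints at (len - 1) / 2 from the center, so THETA = 0 covers len pixels.
    const double half = std::max(0.0, (len - 1.0) / 2.0);
    const int steps = std::max(1, static_cast<int>(std::ceil(len * 4.0)));
    for (int s = 0; s <= steps; ++s) {
        double t = -half + 2.0 * half * s / steps;
        long x = std::lround(cx + t * std::cos(th));
        long y = std::lround(cy - t * std::sin(th));  // minus: counterclockwise on screen
        if (x >= 0 && x < width && y >= 0 && y < height) {
            std::size_t idx = static_cast<std::size_t>(y) * static_cast<std::size_t>(width)
                            + static_cast<std::size_t>(x);
            h[idx] = 1.0f;
        }
    }

    // Normalize so the PSF preserves overall brightness (elements sum to 1).
    double sum = 0.0;
    for (float v : h) sum += v;
    if (sum > 0.0)
        for (float& v : h) v = static_cast<float>(v / sum);
    return h;
}
```

With width = height = 21, len = 9 and thetaDeg = 0, the nine nonzero elements lie on row 10, each equal to 1/9; with thetaDeg = 90 they lie on column 10 instead.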

The function edgetaper() tapers the edges of the input image to reduce the ringing effect in the restored image.
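The window used by edgetaper() is separable: each axis gets a 1-D profile built from two tanh steps, and the 2-D window is their outer product (w2 * w1 in the listing). The sketch below reproduces the 1-D profile with the standard library; taperWindow() is a hypothetical name, and it computes each sample position directly in double precision instead of accumulating x += dx in float as edgetaper() does.

```cpp
#include <cmath>
#include <vector>

// Hypothetical 1-D version of the window built inside edgetaper():
// w(x) = 0.5 * (tanh((x + gamma/2) / beta) - tanh((x - gamma/2) / beta)),
// sampled at n points x = -pi, -pi + 2*pi/n, ..., matching edgetaper's grid.
std::vector<double> taperWindow(int n, double gamma = 5.0, double beta = 0.2) {
    const double pi = std::acos(-1.0);
    std::vector<double> w(n);
    for (int i = 0; i < n; ++i) {
        double x = -pi + 2.0 * pi * i / n;
        w[i] = 0.5 * (std::tanh((x + gamma / 2) / beta) - std::tanh((x - gamma / 2) / beta));
    }
    return w;
}
```

With the defaults gamma = 5.0 and beta = 0.2, the profile is essentially 1 in the middle and passes through 0.5 at \(|x| = \gamma/2 = 2.5\), so roughly the outer tenth of the width or height on each side is attenuated. Multiplying the image by this window makes it fade smoothly toward zero at the borders, which softens the discontinuity that the DFT's periodic extension would otherwise see.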

The functions calcWnrFilter(), fftshift() and filter2DFreq() filter the image with the specified PSF in the frequency domain. They are copied from the tutorial Out-of-focus Deblur Filter.
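fftshift() swaps diagonal quadrants. For even dimensions this moves the PSF center (cols/2, rows/2) to the origin expected by the DFT, and applying it twice restores the input. For odd dimensions the four quadrants do not cover the whole image, which is presumably why main() crops to an even-sized roi with `& -2`. The sketch below (hypothetical fftshift2d() and evenFloor(), standard library only, row-major buffer) shows both properties.

```cpp
#include <utility>
#include <vector>

// Hypothetical stand-in for fftshift(): swaps quadrant 0 with 3 and 1 with 2
// in a row-major rows x cols buffer. Assumes rows and cols are even.
template <typename T>
std::vector<T> fftshift2d(const std::vector<T>& in, int rows, int cols) {
    std::vector<T> out(in);
    const int cy = rows / 2, cx = cols / 2;
    for (int y = 0; y < cy; ++y)
        for (int x = 0; x < cx; ++x) {
            // top-left <-> bottom-right
            std::swap(out[y * cols + x], out[(y + cy) * cols + (x + cx)]);
            // top-right <-> bottom-left
            std::swap(out[y * cols + (x + cx)], out[(y + cy) * cols + x]);
        }
    return out;
}

// Clears the lowest bit, rounding a non-negative size down to an even number
// (the same trick as imgIn.cols & -2 in main()).
inline int evenFloor(int n) { return n & -2; }
```

For a 4×4 buffer holding 0..15, fftshift2d() moves element 10 (the center, at row 2, column 2) to index 0, and evenFloor(125) is 124.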

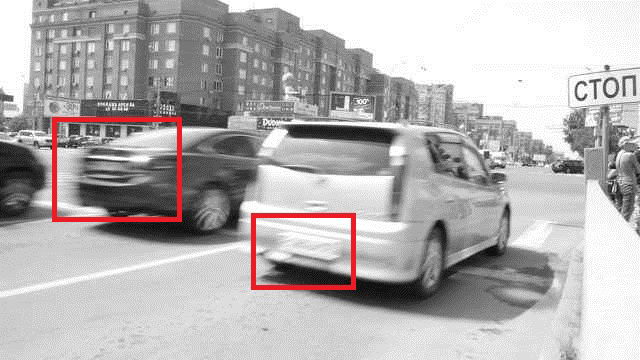

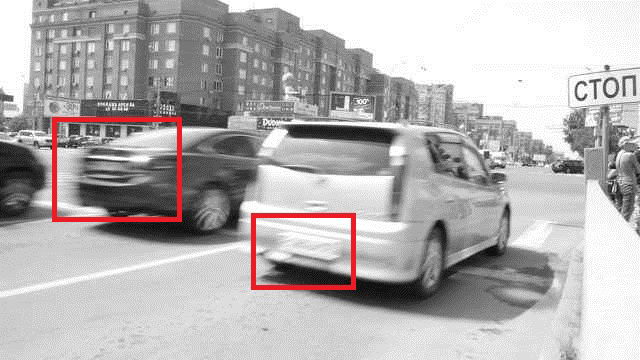

Below is the real-world image with motion blur distortion. The license plates of both cars are unreadable. The red markers show where each car's license plate is located.

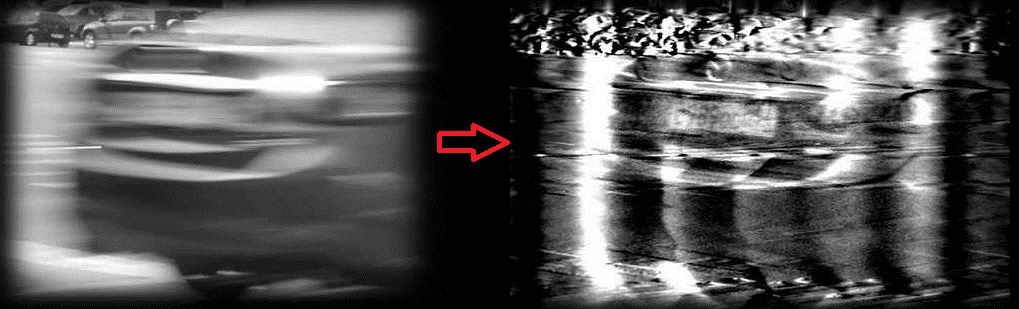

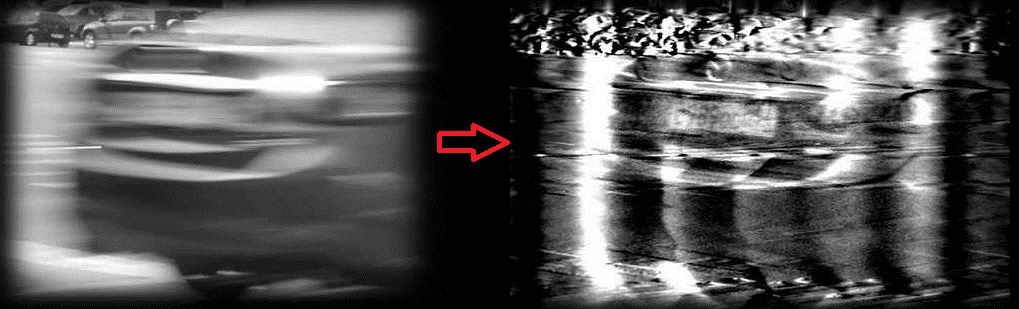

Below is the restoration result for the black car's license plate, computed with \(LEN\) = 125, \(THETA\) = 0 and \(SNR\) = 700.

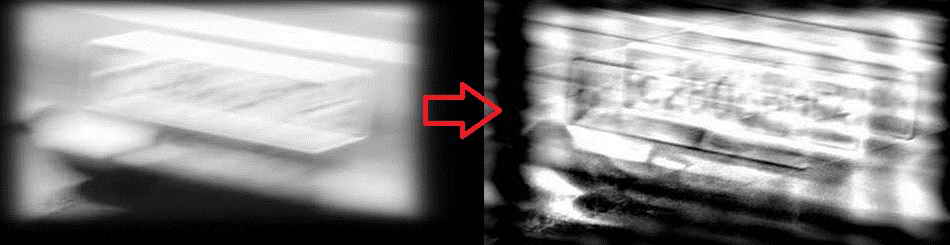

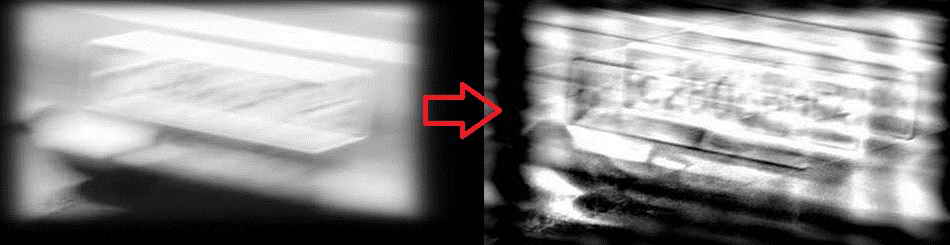

Below is the restoration result for the white car's license plate, computed with \(LEN\) = 78, \(THETA\) = 15 and \(SNR\) = 300.

The values of \(SNR\), \(LEN\) and \(THETA\) were selected manually to give the best visual result. The \(THETA\) parameter matches the direction in which the car is moving, and the \(LEN\) parameter depends on the car's speed. The result is not perfect, but it at least gives a hint of the image content; with some effort, the license plate is now readable.

A quick video demonstration of the license plate recovery method is also available on YouTube.